PaXini's Tora One Demonstrates Autonomous Mastery at CES 2026 with Ice Cream Service Task

Robot Details

Tora One • PaXini

Published

February 17, 2026

Author

Origin Of Bots Editorial Team

Industrial Robot Breaks Through

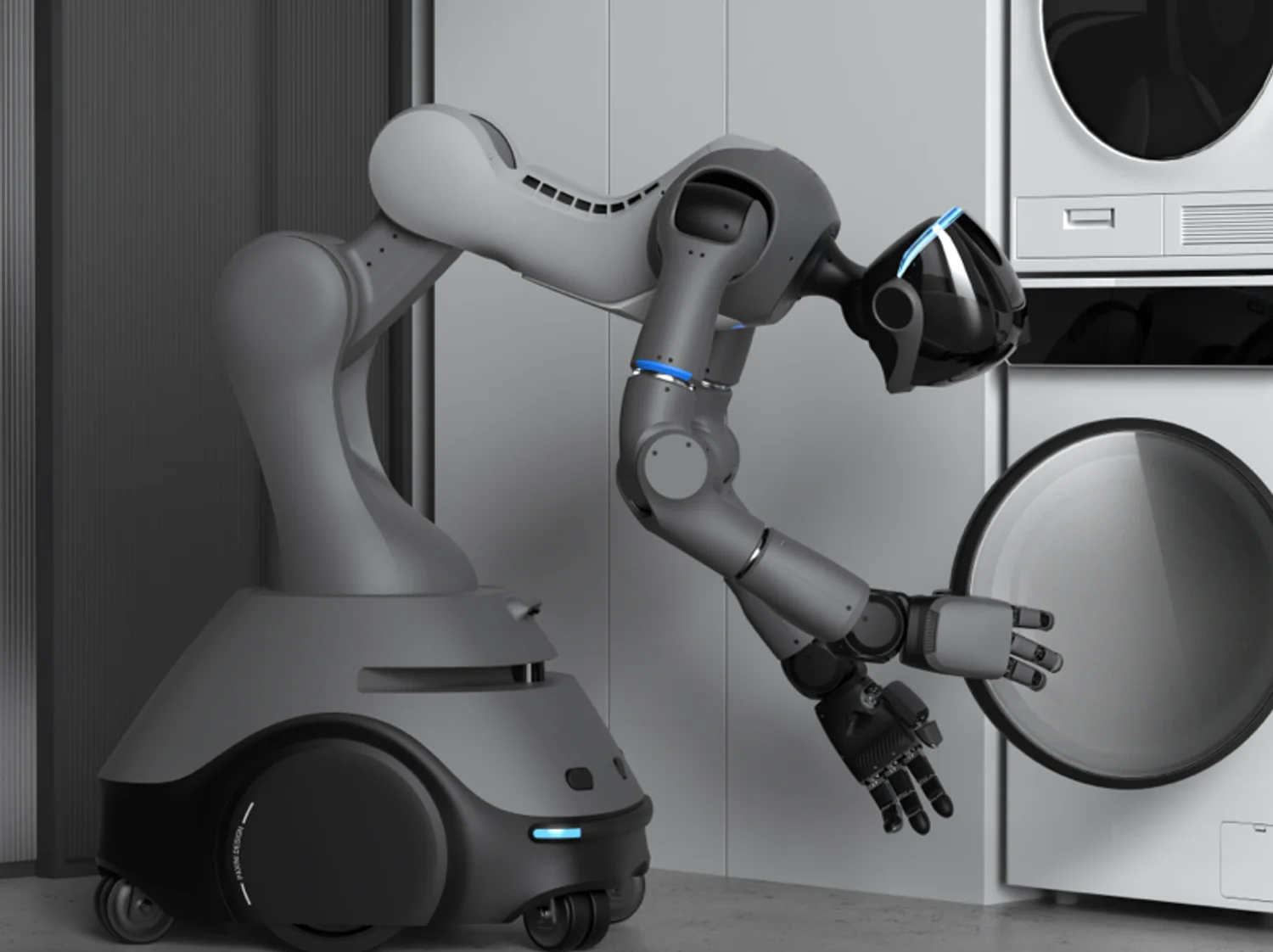

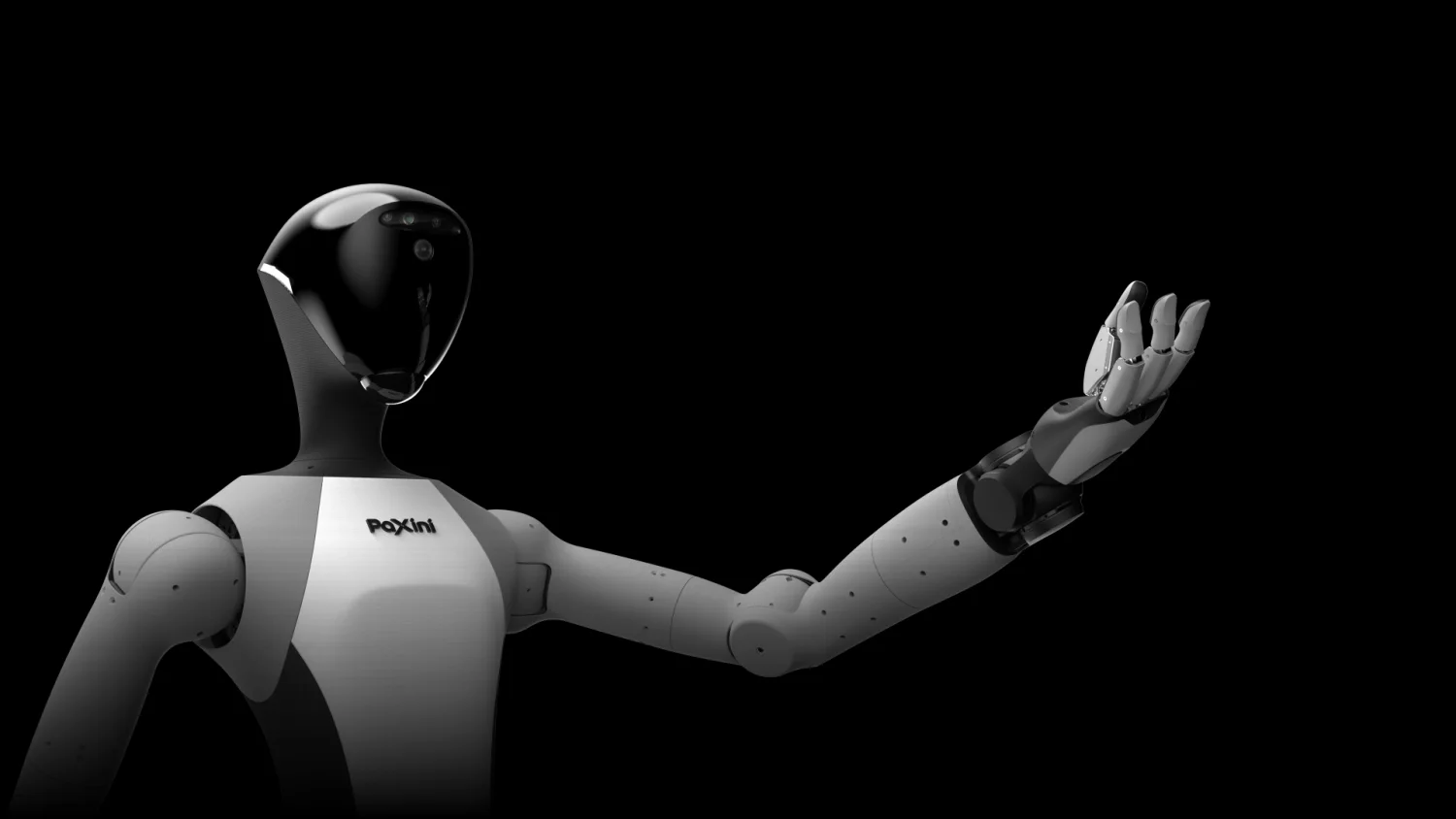

PaXini Tech unveiled the Tora One humanoid robot at CES 2026 in January, showcasing a system capable of executing complete autonomous workflows—from ice cream production to industrial pallet handling. Standing between 1.46 and 1.86 meters with 47 degrees of freedom, the robot represents a significant leap in tactile-driven manipulation, combining advanced sensory perception with precise motor control. The demonstration marks a turning point for embodied AI systems designed to operate alongside humans in real-world commercial environments, signaling that humanoid robots are transitioning from laboratory prototypes to deployable service platforms.

Touch Redefines Robot Precision

What distinguishes Tora One from earlier humanoid platforms is its integration of 1,956 high-precision tactile sensors distributed across its four-fingered bionic hands, generating 7,824 tactile channels. These sensors capture multidimensional feedback (pressure, friction, softness, and temperature), enabling the robot to adjust grip force in real time based on object properties. This tactile feedback loop lets Tora One handle fragile items like cups and delicate pastries with human-level sensitivity while also managing industrial tasks that require sustained force application. The sensory architecture shifts dexterous manipulation from pre-programmed motion sequences to adaptive, context-aware interactions.
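To make the idea of a tactile feedback loop concrete, here is a minimal Python sketch of one control step that tightens the grip when slip is detected and relaxes it otherwise. It is an illustration only, not PaXini's controller: the `TactileReading` fields, the `adjust_grip` function, and the gain and threshold values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TactileReading:
    """One simplified tactile sample (hypothetical fields and units)."""
    pressure: float  # normal force at the fingertip, in newtons
    slip: float      # 0.0 = no slip, 1.0 = object sliding freely


def adjust_grip(current_force: float, reading: TactileReading,
                max_force: float, gain: float = 0.5,
                slip_threshold: float = 0.1) -> float:
    """Return the next commanded grip force for one control step."""
    if reading.slip > slip_threshold:
        # Object is slipping: tighten in proportion to the slip signal.
        next_force = current_force + gain * reading.slip
    else:
        # Grasp is stable: relax slightly to avoid crushing fragile items.
        next_force = current_force - 0.05 * gain
    # Clamp to the safe range for the object being held.
    return min(max(next_force, 0.0), max_force)
```

Setting a low `max_force` for a paper cup and a higher one for a tool handle is one simple way such a loop could adapt to object properties; a real system would derive those limits from the sensed softness rather than hard-code them.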

Perception Meets Autonomous Navigation

Tora One's sensory architecture extends beyond touch to encompass comprehensive environmental understanding. The system integrates five monocular cameras, two depth cameras, and a 3D LiDAR suite supporting laser SLAM navigation with 360-degree obstacle detection. This multimodal perception stack enables the robot to map complex indoor spaces, identify objects from multiple angles, and plan collision-free paths autonomously. Real-time fusion of visual and tactile data through the proprietary VTLA-Model allows Tora One to recognize object geometry, predict grasp success, and execute manipulation tasks without human intervention—a critical capability for unstructured logistics and healthcare environments.
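As a rough illustration of what planning a collision-free path means once a map exists, the Python sketch below runs a breadth-first search over a small 2D occupancy grid. This is a generic textbook technique, not the planner PaXini uses; the `plan_path` function and the grid encoding (0 for free, 1 for obstacle) are assumptions made for the example.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 2D occupancy grid (0 = free, 1 = obstacle).

    Returns a list of (row, col) cells from start to goal using
    4-connected moves, or None if no collision-free path exists.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None  # start or goal sits inside an obstacle
    parents = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

In practice the grid would come from the SLAM map, and a production planner would also account for the robot's footprint and moving obstacles.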

From Hospitality to Heavy Industry

The ice cream production demonstration at CES illustrated Tora One's capacity to execute end-to-end service workflows, but its practical deployment scope extends far beyond hospitality. In logistics, the robot handles pallet jacks and load transfers with 6 to 8 kg per-arm capacity and ±0.05 mm positioning accuracy. Healthcare applications include elderly support and assistance tasks requiring gentle, responsive interaction. Industrial settings benefit from its ability to perform precision assembly, wafer pick-and-place operations, and quality inspection tasks. The modular design and ROS2 compatibility enable rapid customization for sector-specific requirements, making Tora One adaptable across manufacturing, hospitality, healthcare, and supply chain operations.

Engineering the Interaction Layer

Tora One's skill architecture balances mobility, dexterity, and endurance to sustain extended human-robot collaboration sessions. The wheeled base achieves 1.0 m/s standard speed with a 50 × 40 cm footprint, enabling navigation through standard doorways and industrial aisles. Its 40 Ah battery supports 8 hours of continuous operation, sufficient for full-shift deployment in logistics hubs or healthcare facilities. The 70 kg baseline weight (up to 80 kg in heavy-duty configuration) provides stability during high-force manipulation while remaining light enough for safe collaborative work. Force-limiting actuators, collision detection, and emergency stop systems embedded throughout the kinematic chain ensure safe interaction with human coworkers. The NVIDIA Jetson AGX Orin processor handles real-time tactile processing, vision fusion, and autonomous navigation simultaneously, which is critical for robots operating in dynamic, unpredictable environments where pre-planned motions prove insufficient.
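The endurance figures above imply a simple power budget, worked through in the Python sketch below. The function names are illustrative, and the calculation assumes a constant current draw and continuous driving at the standard speed, neither of which the published specifications state.

```python
BATTERY_CAPACITY_AH = 40.0  # from the published specification
RUNTIME_H = 8.0             # from the published specification
SPEED_M_PER_S = 1.0         # standard base speed


def average_current_a(capacity_ah: float, runtime_h: float) -> float:
    """Average current draw implied by draining the battery over the runtime."""
    return capacity_ah / runtime_h


def max_travel_km(speed_m_per_s: float, runtime_h: float) -> float:
    """Upper bound on distance if the base drove nonstop for the whole runtime."""
    return speed_m_per_s * runtime_h * 3600 / 1000


if __name__ == "__main__":
    print(average_current_a(BATTERY_CAPACITY_AH, RUNTIME_H))  # amperes
    print(max_travel_km(SPEED_M_PER_S, RUNTIME_H))            # kilometres
```

Under these assumptions the average draw works out to 5 A, and 28.8 km is the ceiling on distance covered in one shift. Converting the budget to watt-hours would require the battery voltage, which the article does not give.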

Versus Rivals Breakdown

| Robot | Strengths over Tora One | Tora One Advantages | Weaknesses vs. Tora One |
|---|---|---|---|
| AIMY | Lighter weight, faster deployment cycles | Advanced tactile sensing (1,956 sensors), 47 DoF, 8-hour runtime | Limited speed, fewer sensory channels |
| Henry | Specialized healthcare protocols, established clinical validation | Multidimensional tactile feedback, autonomous navigation, industrial versatility | Narrower application scope, less dexterous manipulation |
| Reflex Humanoid | Lower cost, simpler maintenance | Full-body 6-axis force/torque sensing, real-time grip adjustment, SLAM autonomy | Fewer degrees of freedom, reduced sensory resolution |
| W1 Pro | Compact form factor, warehouse optimization | Superior tactile resolution (7,824 channels), multimodal perception (vision + touch), ±0.05 mm accuracy | Requires more training data, higher computational overhead |
